Submission

Note:

Current phase: Evaluation Set Phase, 2023.11.14 to 2023.12.21 (AoE; the original end date was 2023.12.14)

1. Due to the limits of CodaLab, you have to participate in a new competition on CodaLab for the evaluation phase leaderboard. The results of the baseline system on the evaluation set have been published on GitHub.

2. Follow the steps below. Make sure to register on CodaLab with the same institutional email address you used when registering for the MISP 2023 challenge.

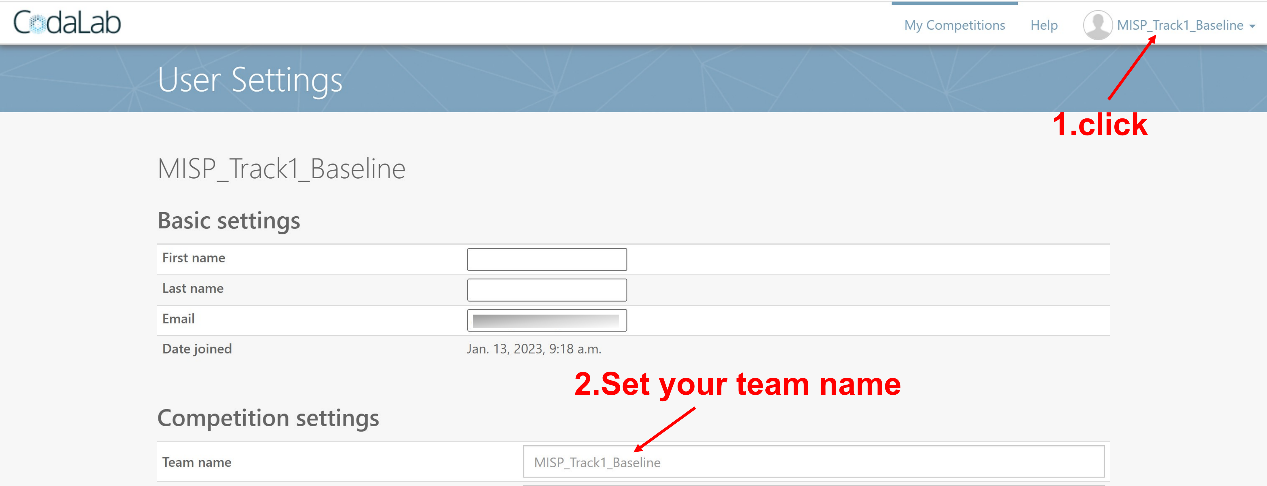

3. Then set your team name on CodaLab, make sure it matches the team name you used to register for the MISP 2023 challenge, and apply to participate.

- STEP 1: Register on CodaLab

Note: Make sure to use the same email address and team name for the MISP 2023 challenge and on CodaLab, or you may not be allowed to submit results to the competition. If you would like to change your email address, please contact the organizers.

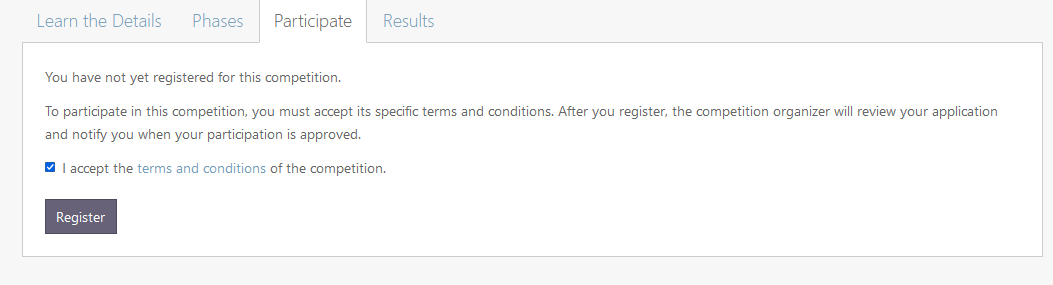

- STEP 2: Apply to join the competition and wait for approval.

Tip: Just check the box (you don't need to download the terms, as you already signed them when you registered for the MISP 2023 challenge), and we will grant access that night.

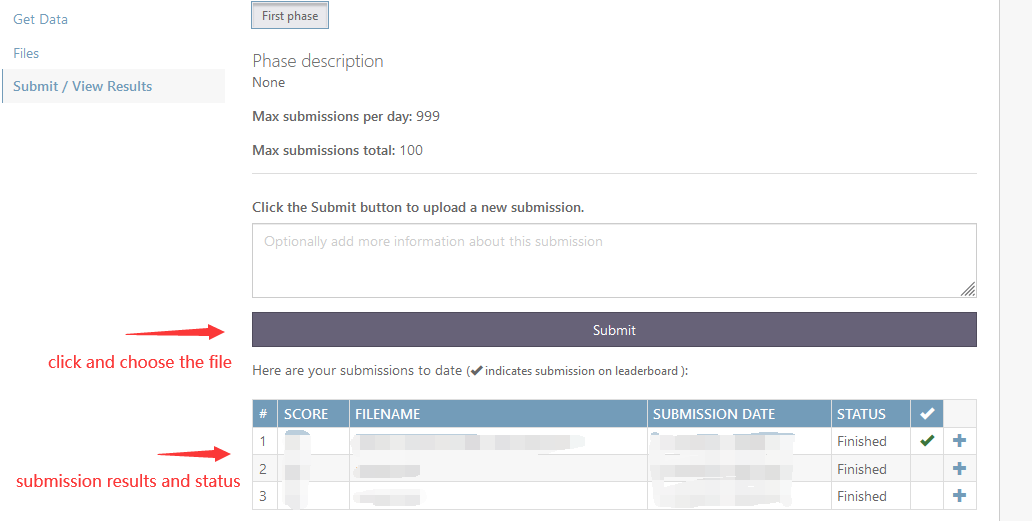

- STEP 3: Submit your result and check the scores

- Please note that due to platform limitations, we cannot test audio directly. You therefore need to decode the audio yourself and obtain the transcribed text with the ASR backend provided in the baseline system. Please follow the instructions in Baseline System to decode the speech using the provided ASR backend. Then submit the text to CodaLab, and the platform will calculate its CER score. To ensure fairness and rule out cheating, before announcing the results of the challenge we will ask you to submit the audio file corresponding to your highest score (the submission method is to be determined). We will decode the audio and calculate the CER. If the resulting CER differs significantly from the score displayed on the CodaLab platform, the applicant will be disqualified.

Submit the transcribed text to CodaLab. Make sure your submitted zip file has the following directory structure:

|submission.zip

||--submission.txt
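The layout above can be produced with a few lines of Python. This is only a minimal sketch: the helper name `make_submission_zip` and the input file name are illustrative assumptions, not part of the official tooling. The only requirement taken from the text is that `submission.txt` sits at the top level of `submission.zip`.

```python
import zipfile
from pathlib import Path


def make_submission_zip(txt_path, zip_path="submission.zip"):
    """Pack a transcript file into a zip with submission.txt at the archive root.

    Hypothetical helper for illustration; not provided by the organizers.
    """
    txt_path = Path(txt_path)
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        # arcname fixes the stored name and keeps the file at the top level,
        # regardless of where txt_path lives on disk.
        zf.write(txt_path, arcname="submission.txt")
    return Path(zip_path)
```

Setting `arcname` explicitly avoids accidentally nesting the file inside a folder in the archive, which happens easily when a whole directory is zipped.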

Make sure each row of the transcript uses the following format, a key (the segment_id) followed by the text, separated by a space character:

segment_id 文字文字文字 (only Chinese characters included in the text part without any punctuation)
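Before uploading, it may help to check each row against the format above. The sketch below is one possible check, under two assumptions that the text does not confirm: that "Chinese characters" means the basic CJK Unified Ideographs block (U+4E00 to U+9FFF), and that an empty text part is not accepted. The function name `check_line` and the segment IDs in the usage lines are made up.

```python
import re

# Assumption: "Chinese characters" = basic CJK Unified Ideographs block.
# The official scoring script may accept a different range.
_CJK_ONLY = re.compile(r"[\u4e00-\u9fff]+")


def check_line(line):
    """Return None if the row looks valid, otherwise a short error message."""
    parts = line.rstrip("\n").split(" ")
    if len(parts) != 2:
        return "expected exactly one space between segment_id and text"
    segment_id, text = parts
    if not segment_id:
        return "empty segment_id"
    if not _CJK_ONLY.fullmatch(text):
        return "text must contain only Chinese characters"
    return None


# Hypothetical rows for illustration.
print(check_line("seg_0001 你好世界"))   # valid row
print(check_line("seg_0002 你好,世界"))  # punctuation in the text part
```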

Here is a submission example and the full segment_id list for the evaluation set. Note that the ground truth contains fewer segments than those extracted directly from the transcripts (.textgrid files), because some segments labeled in the .textgrid files lack the corresponding audio or video segments. The segment_ids in the text you submit therefore need to be consistent with the segment_id list. The segment_id list corresponds to the segments file we provide. To prepare the corresponding audio or video segments from the segments file, visit the GitHub repository of the baseline, then view and modify simulation/gss_main/gss_main/prepare_gss_dev.sh.
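To confirm that a submission is consistent with the segment_id list, the two sets of IDs can be compared directly. The following is a rough sketch; `compare_segment_ids` is a hypothetical helper, and it assumes the segment_id list has already been loaded as a sequence of strings (the file format of the official list is not specified here).

```python
def compare_segment_ids(submission_lines, reference_ids):
    """Return (missing, unexpected) segment_ids, each as a sorted list.

    missing:    IDs in the reference list that the submission lacks.
    unexpected: IDs in the submission that are not in the reference list.
    """
    submitted = {
        line.split(maxsplit=1)[0]      # first whitespace-delimited field
        for line in submission_lines
        if line.strip()                # ignore blank lines
    }
    reference = set(reference_ids)
    missing = sorted(reference - submitted)
    unexpected = sorted(submitted - reference)
    return missing, unexpected
```

Both lists should be empty before uploading: extra IDs typically come from segments extracted from the .textgrid files that have no matching audio or video.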

When the "Status" in the submission table turns to "Finished", refresh the page to check the score (CER) below. You can also click the “View scoring output log” button to check whether your submission is missing any segments.
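For a local sanity check before uploading, CER can be estimated with the usual definition: character-level edit distance divided by the number of reference characters. The sketch below assumes a corpus-level score (edits and characters summed over all segments) and counts a missing segment as all deletions. The platform's scoring script may differ in these details, so treat the numbers as approximate.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two strings, counted in characters."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def cer(refs, hyps):
    """Corpus-level CER over dicts mapping segment_id -> text (an assumption)."""
    total_edits = 0
    total_chars = 0
    for seg_id, ref in refs.items():
        hyp = hyps.get(seg_id, "")  # a missing segment counts as all deletions
        total_edits += edit_distance(ref, hyp)
        total_chars += len(ref)
    if total_chars == 0:
        raise ValueError("reference contains no characters")
    return total_edits / total_chars
```

This only works on data for which you have reference transcripts, such as a development set; the evaluation set references are held by the platform.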

- Other information

Make sure that (a) your submitted file includes all segments in the evaluation set and (b) your submission file is in the required format; otherwise the submission will fail.

Each submitted score appears on the leaderboard directly if it is your best score so far.

During the evaluation set phase, each group is allowed to submit 5 times per day. Because of a CodaLab issue, there is a small chance that a result will not be returned for a long time. In that case, please wait patiently or submit again at another time.

If you run into any problems during submission, please contact us.