Background & Task Overview

With the emergence of many speech-enabled applications, usage scenarios (e.g., home and meeting) are becoming increasingly challenging because of adverse acoustic environments (far-field audio, background noise, and reverberation) and conversational multi-speaker interactions with a large proportion of overlapping speech. State-of-the-art speech processing techniques based on the audio modality alone hit a performance bottleneck, e.g., yielding a word error rate of about 40% in the CHiME-6 dinner party scenario. Motivated by this, the MISP challenge aims to tackle these problems by introducing additional modality information (such as video or text), yielding better environmental and speaker robustness in realistic applications.

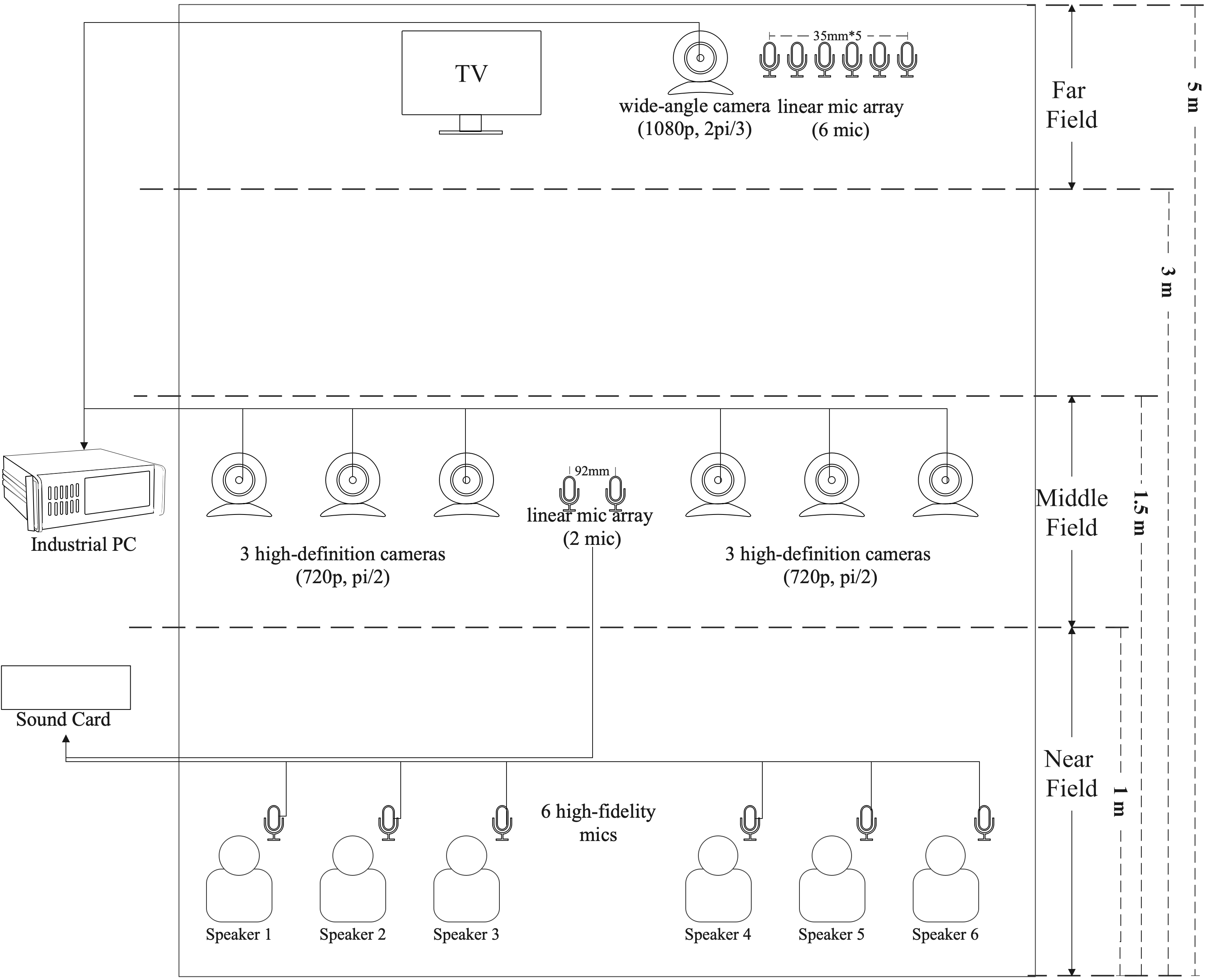

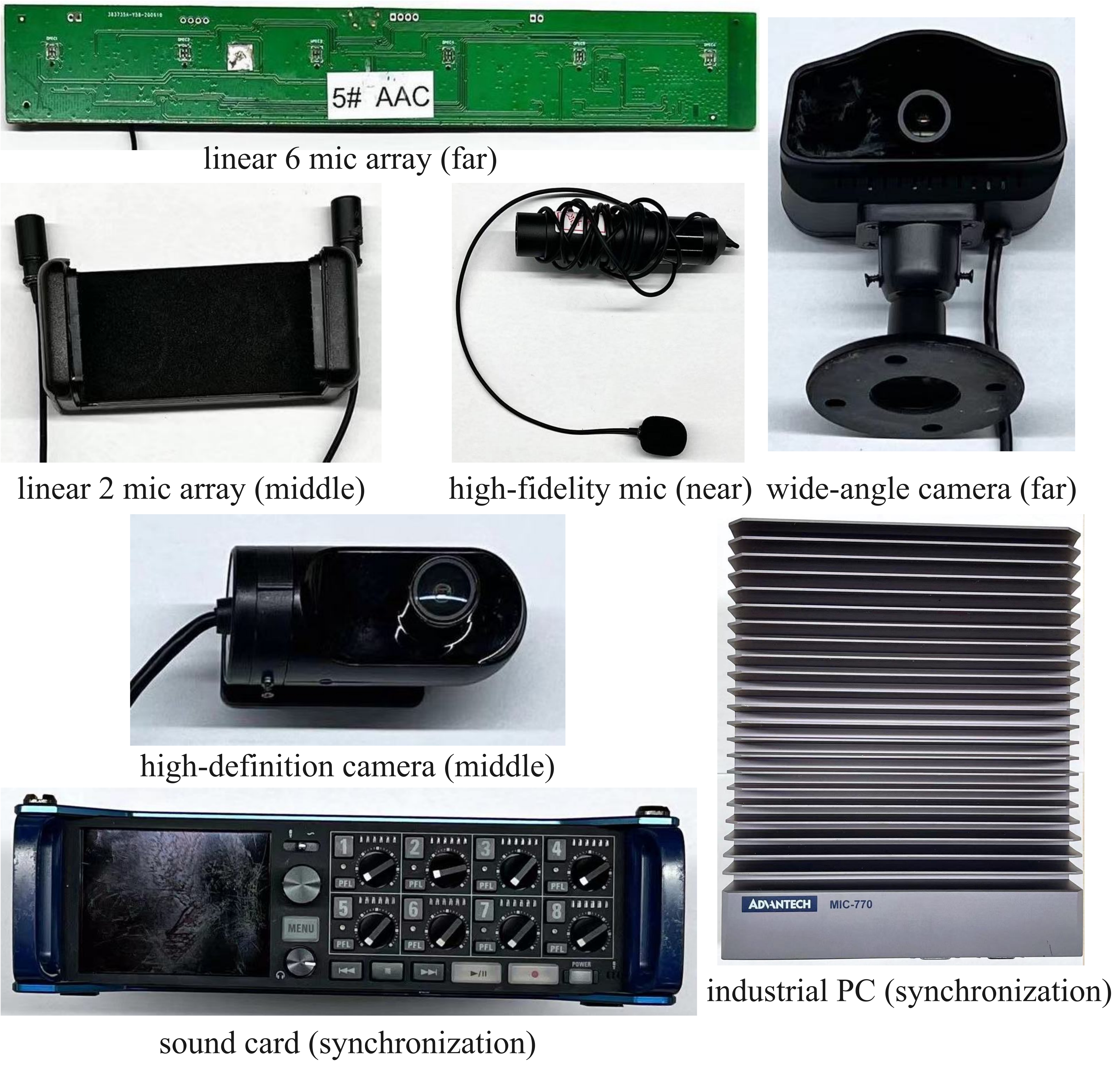

For the first MISP challenge, we target the home TV scenario, in which several people chat in Chinese while watching TV in the living room and can interact with a smart speaker/TV. As a new feature, carefully selected far-field, mid-field, and near-field microphone arrays and cameras are arranged to collect audio and video data, respectively. The time synchronization among the different microphone arrays and video cameras is also carefully designed to support research on multi-modality fusion. The challenge considers the problem of distant multi-microphone conversational audio-visual wake-up and audio-visual speech recognition in everyday home environments. A central question is how to leverage both audio and video data to improve environmental robustness. Researchers from both academia and industry are warmly welcome to work on our two audio-visual tasks (detailed below), which aim to promote research on speech processing with multimodal information so that it crosses the practical threshold for realistic applications in challenging scenarios. All approaches are encouraged, whether emerging or established, and whether they rely on signal processing or machine learning.