Track2 Datasets

The challenge uses the MISP2022 dataset which contains 100+ hours of audio-visual data. The dataset includes 320+ sessions. Each session consists of a about 20-minutes discussion. The reason why we select an about 20-min duration rather than a longer recording for a session is that we want to alleviate the clock drift problem for synchronizing multiple devices.

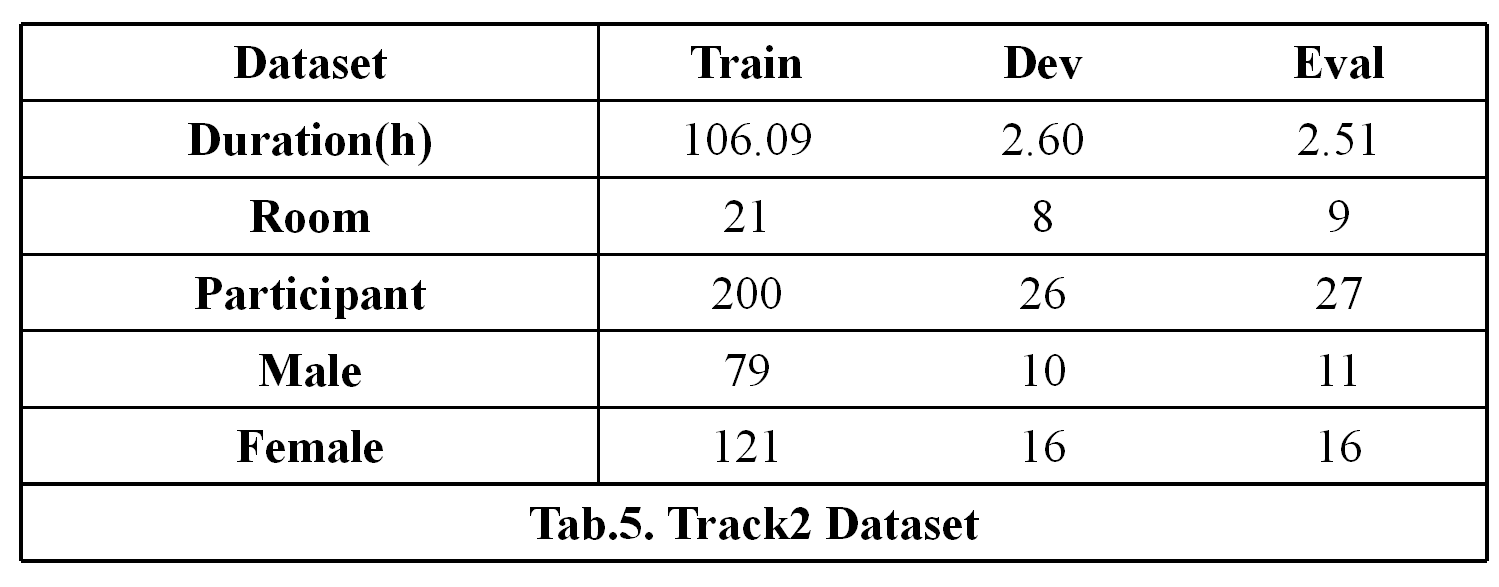

The data have been split into training, development test and evaluation test sets as follows.

There isn’t duplicate speaker and room in training, development test and evaluation test sets.For training and development sets, the released video includes the far scenario and the middle scenario while the released audio includes the far scenario, the middle scenario and the near scenario. But for the evaluation set, we only release the video in far scenario and the audio in far scenario during the challenge.

The release of the evaluation set will use the same directory structure and file name rules as the development set. We will provide an oracle speech segmentation timestamp file with each line of content < start_time > < end_time > speech. Each line of the final submitted text file is < speaker ID >_< session ID >

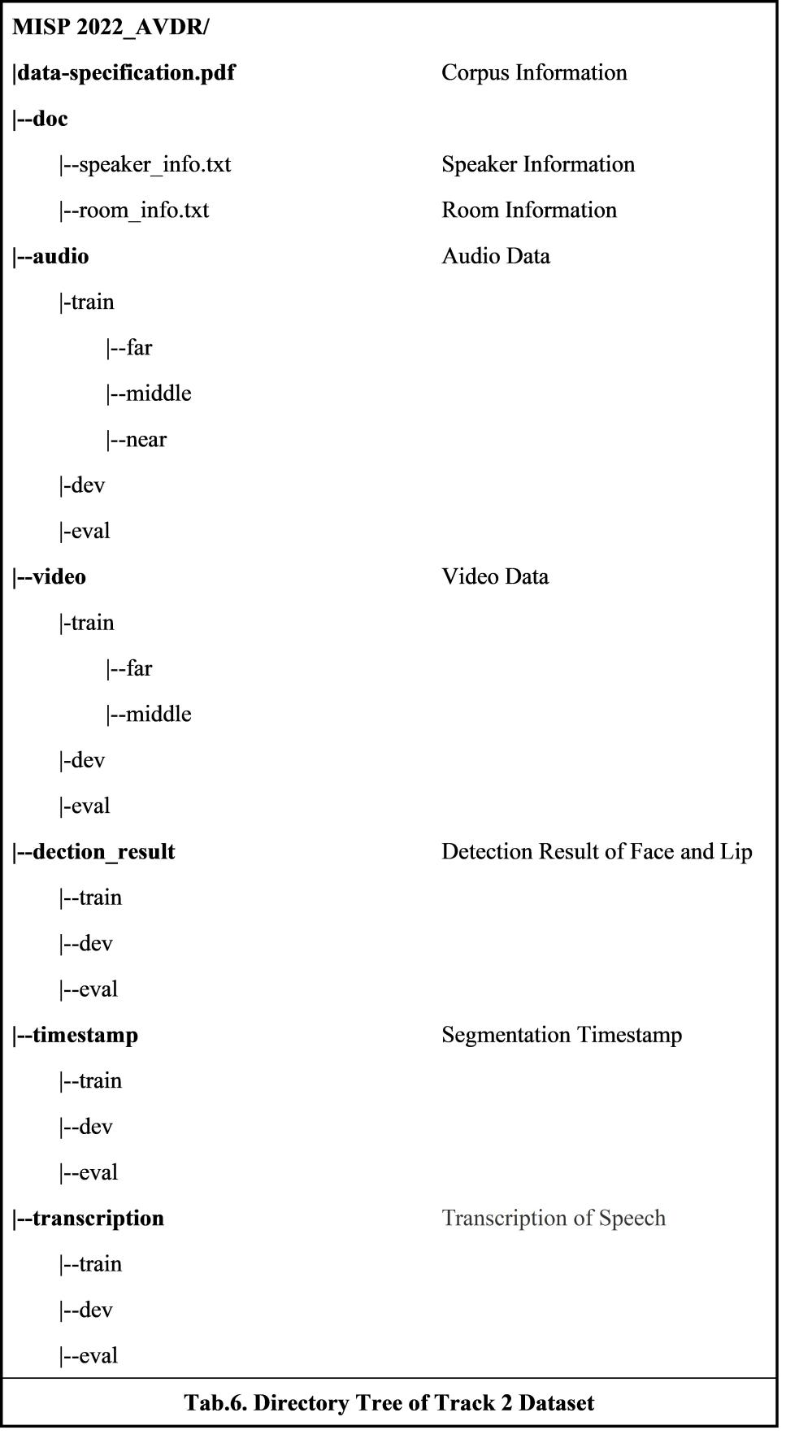

Directory Structure

There is a pdf file in the top-level directory, called data-specification.pdf, which describes the introduction of the data set. There are two txt files in the “doc” folder, speaker_info.txt describes the gender and age range of every speaker, and room_info.txt describes the size of every room. We also provide checkboard images for each far wide-angle camera as well as computed camera intrinsic params so that such distortions can be undone. There are two far wide-angle cameras used in recording. The checkboard images of the far wide-angle camera used in the room which index is greater than or equal to 50 are store in the “checkboard_images/room_ge_50” folder while the images which index is less than 50 are store in the “checkboard_images/room_lt_50” folder.

The audio data, video data and the transcriptions follow this directory structure. Each audio/video/transcription directory has subdirectories for training, development, and evaluation sets. Each train/dev/eval directory has subdirectories for far, middle, and near field recording device in the audio directory. Each train/dev/eval directory has subdirectories for far and middle field recording device in the video directory.

Audio

All audio data are distributed as WAV files with a sampling rate of 16 kHz. Each session consists of the recordings made by the far-field linear microphone array with 6 microphones, the middle-field linear microphone array with 2 microphones and the near-field high-fidelity microphones worn by each participant. These WAV files are named as follows: Far-field array microphone: < Room ID >_< Speakers IDs >_< Configuration ID >_< Index > _Far_< Channel ID >.wav Middle-field array microphone: < Room ID >_< Speakers IDs >_< Configuration ID >_< Index > _Middle_< Channel ID >.wav Near-field high-fidelity microphone: < Room ID >_< Speakers IDs >_< Configuration ID >_< Index > _Near_< Speaker ID >.wav

Video

All video data are distributed as MP4 files with a frame rate of 25 fps. Each session consists of the recordings made by the far-field wide-angle camera and the middle-field high-definition cameras worn by each participant. These MP4 files are named as follows: Far-field wide-angle camera (1080p): < Room ID >_< Speakers IDs >_< Configuration ID >_< Index > _Far.mp4 Middle-field high-definition camera (720p): < Room ID >_< Speakers IDs >_< Configuration ID >_< Index > _Middle_< Speaker ID >.mp4

The transcriptions are provided in TextGrid format for each speaker in each session. We use speech signal recorded by the near-field high-fidelity microphone to manual transcription. After manual rechecking, the transcription accuracy rate is as high as 99% or more. The TextGrid files are named as < Room ID >_< Speakers IDs >_< Configuration ID >_< Index > _Near_< Speaker ID >.TextGrid. The TextGrid file includes the following pieces of information for each utterance:

- Start time ("start_time")

- End time ("end_time")

- Transcription ("Chinese characters")

In order to cover the real scene comprehensively and evenly, we designed the following recording configuration by controlling variables as in Table 1.

| Config ID | Time | Content | Light | TV | Group | ||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| 00 | Day | Wake word & Similar words | off | on | 1 | ||||||

| 01 | Talk freely | off | on | 1 | |||||||

| 02 | on | on | 1 | ||||||||

| 03 | off | off | 2 | ||||||||

| 04 | on | off | 2 | ||||||||

| 05 | off | on | 2 | ||||||||

| 06 | on | on | 2 | ||||||||

| 07 | on | off | 1 | ||||||||

| 08 | off | off | 1 | ||||||||

| 09 | Night | on | on | 1 | |||||||

| 10 | on | off | 2 | ||||||||

| 11 | on | on | 2 | ||||||||

| 12 | on | off | 1 | ||||||||

| Tab.5. Configuration | |||||||||||

"Time" refers to the recording time, the value is day or night. "Content" refers to the speaking content. We also recorded some data only containing wake-up/similar word to support audio-visual voice wake-up task. "Light" refers to turning on/off the light. "TV" refers to turning on/off the TV. "Group" refers to how much groups of participants in a conversation. By observing the real conversations these were taking place in real living room, we found that the participants would be divided into several groups to discuss different topics. Compared with all participants discussing the same topic, grouping would result in higher overlap ratios. We found that average speech overlap ratios of 𝐺𝑟𝑜𝑢𝑝 = 1 and 𝐺𝑟𝑜𝑢𝑝 = 2 are 10%~20% and 50%~70%, respectively. And the number of groups greater than 3 is very rare when the number of participants is no more than 6.